Anjie Le is a second-year DPhil student in Biomedical Engineering at the University of Oxford, supervised by Prof. Alison Noble, CBE FRS FREng FIET, and specializes in medical AI, ultrasound imaging, and continual learning. With a BA in Mathematics and an MPhil in Data Intensive Science from the University of Cambridge, Anjie combines a strong theoretical foundation with a drive for clinically impactful innovation. Their research focuses on advancing medical large vision-language models (LVLMs), developing robust unlearning algorithms, and improving real-world generalization in medical imaging.

Publications

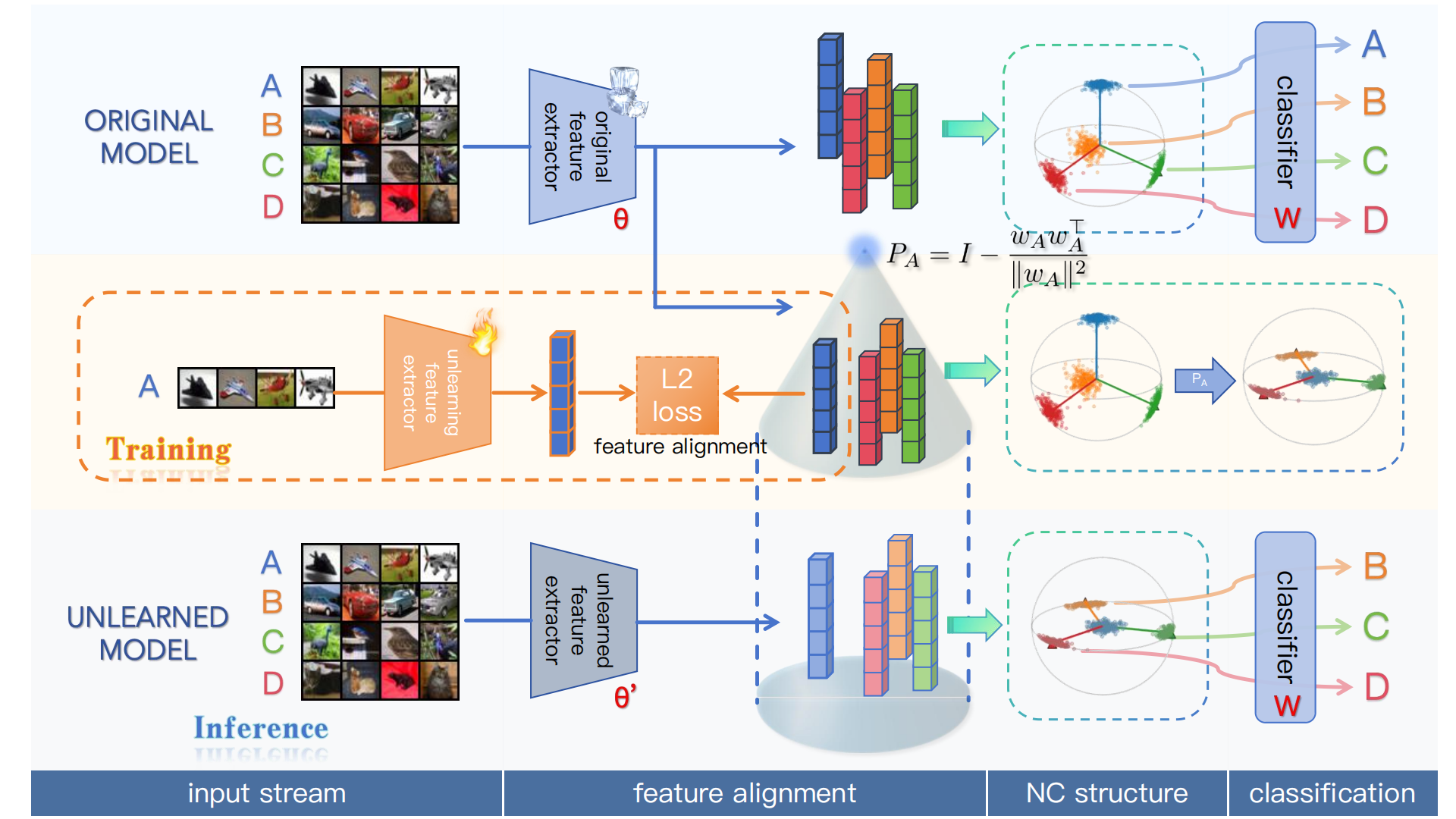

POUR: A Provably Optimal Method for Unlearning Representations via Neural Collapse

Anjie Le, Can Peng, Yuyuan Liu, J. Alison Noble

arXiv (Nov 2025)

- Introduces representation-level weak unlearning and the Representation Unlearning Score (RUS).

- Proves that orthogonally projecting away the forgotten class direction preserves both Neural Collapse geometry (the projection of a simplex equiangular tight frame, or ETF, remains a simplex ETF) and Bayes optimality.

- Introduces two unlearning methods: POUR-P, a closed-form projection operator that performs instantaneous forgetting, and POUR-D, a projection-guided distillation method that propagates forgetting into deep encoders.

- Touches on unlearning under domain shift and cross-modal unlearning.
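The geometric claim in the second bullet above can be checked numerically. The snippet below is a minimal sketch, not the paper's implementation: it assumes the "forgotten class direction" is the forgotten class's mean vector, builds a standard simplex ETF of K class means, projects that direction out, and measures the geometry of the remaining K-1 means. All function names are hypothetical and chosen for illustration only.

```python
import math


def simplex_etf(k):
    """Return k unit vectors in R^k (as rows) forming a simplex ETF."""
    scale = math.sqrt(k / (k - 1))
    return [
        [scale * ((1.0 if i == j else 0.0) - 1.0 / k) for j in range(k)]
        for i in range(k)
    ]


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def project_out(v, direction):
    """Orthogonally project v onto the complement of `direction`."""
    coeff = dot(v, direction) / dot(direction, direction)
    return [a - coeff * b for a, b in zip(v, direction)]


def forget_class(means, forget_idx):
    """Assumed setup: remove the forgotten class's mean direction from
    every retained class mean and drop the forgotten class itself."""
    direction = means[forget_idx]
    return [
        project_out(m, direction)
        for i, m in enumerate(means)
        if i != forget_idx
    ]


def pairwise_cosines(vectors):
    """Cosine similarity for every unordered pair of vectors."""
    norms = [math.sqrt(dot(v, v)) for v in vectors]
    return [
        dot(vectors[i], vectors[j]) / (norms[i] * norms[j])
        for i in range(len(vectors))
        for j in range(i + 1, len(vectors))
    ]


k = 5
means = simplex_etf(k)
retained = forget_class(means, forget_idx=k - 1)

norms = [math.sqrt(dot(v, v)) for v in retained]
cosines = pairwise_cosines(retained)
centroid = [sum(col) for col in zip(*retained)]

# A simplex ETF on k-1 vectors has equal norms, pairwise cosine -1/(k-2),
# and a zero centroid.
print("norms:", norms)
print("cosines:", cosines)
print("centroid:", centroid)
```

For a simplex ETF on K-1 vectors, the printed norms should all be equal, every pairwise cosine should be -1/(K-2), and the centroid should be zero up to floating-point error.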

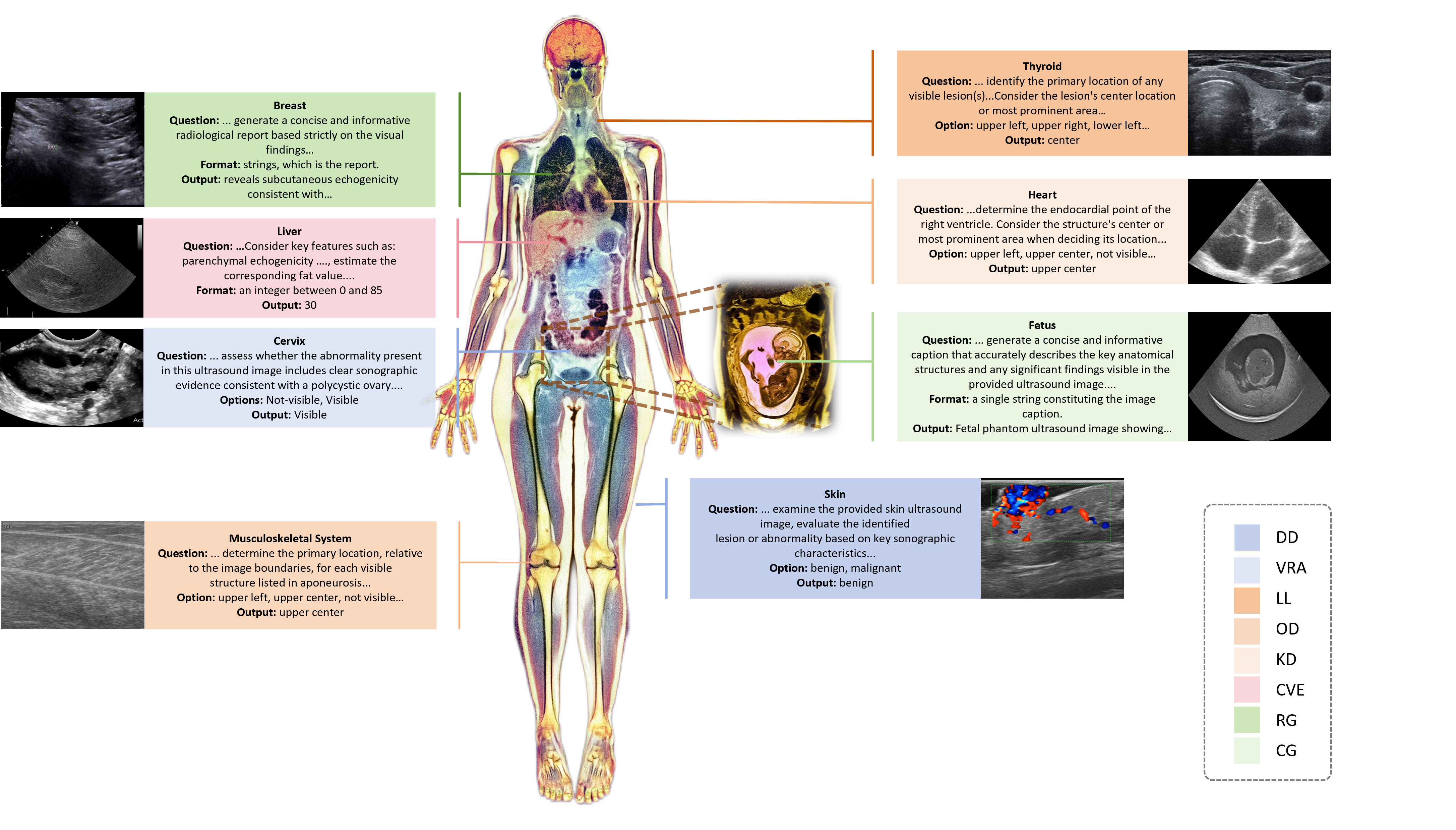

U2-BENCH: Benchmarking Large Vision-Language Models on Ultrasound Understanding

Anjie Le, Henan Liu, Yue Wang, Zhenyu Liu, Rongkun Zhu, Taohan Weng, Jinze Yu, Boyang Wang, Yalun Wu, Kaiwen Yan, Quanlin Sun, Meirui Jiang, Jialun Pei, Siya Liu, Haoyun Zheng, Zhoujun Li, J. Alison Noble, Jacques Souquet, Xiaoqing Guo, Manxi Lin, Hongcheng Guo

arXiv (May 2025)

- Presents the first comprehensive benchmark evaluating large vision-language models (LVLMs) on diverse ultrasound tasks, including classification, detection, localization, and report generation. The benchmark spans over 7,200 cases and multiple anatomical regions, highlighting both strengths and areas for improvement in ultrasound understanding.

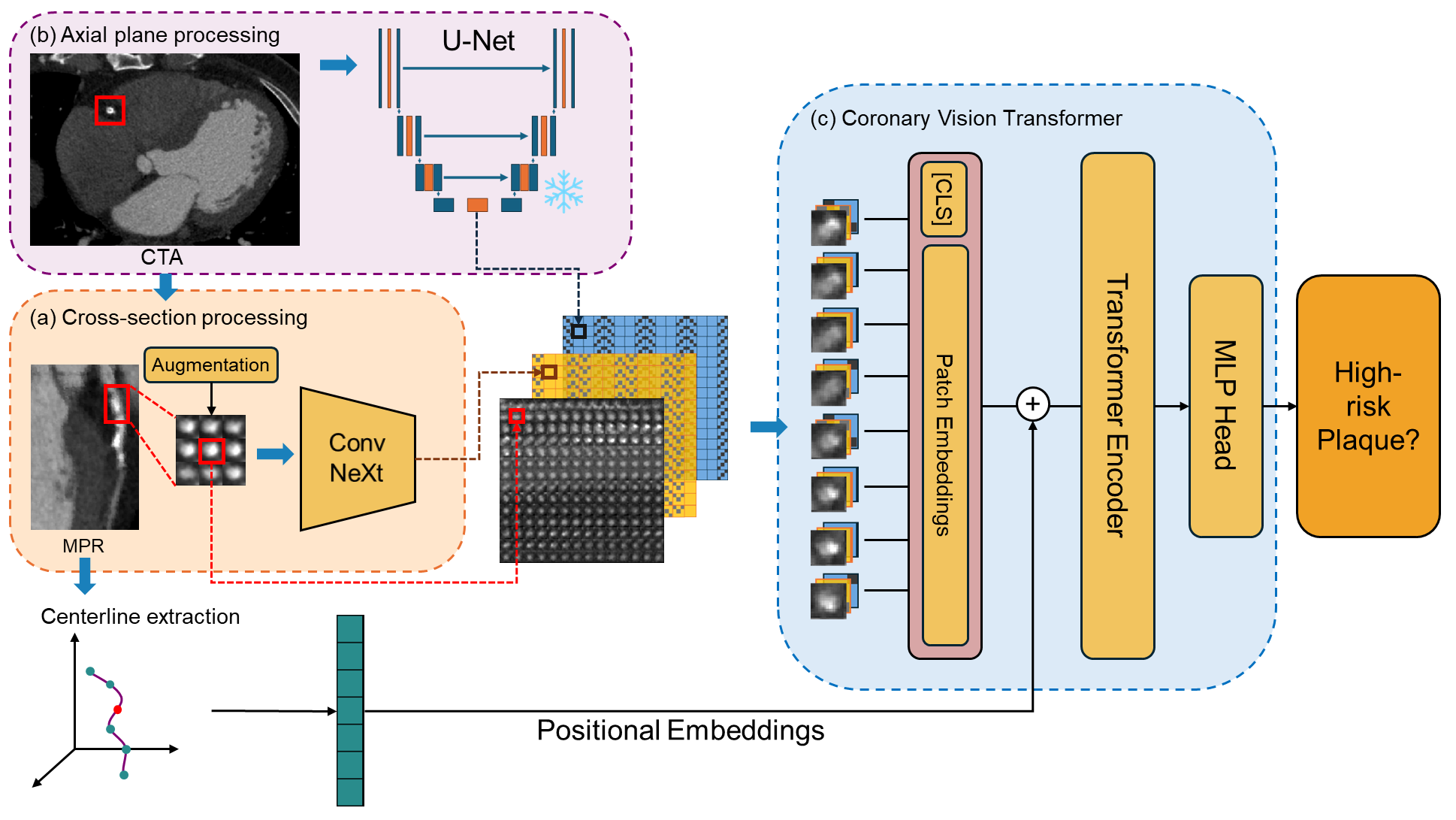

ViTAL-CT: Vision Transformers for High-Risk Plaque Classification in Coronary CTA

Anjie Le, Jin Zheng, Tan Gong, Quanlin Sun, Jonathan Weir-McCall, Declan P. O’Regan, Michelle C. Williams, David E. Newby, James H.F. Rudd, Yuan Huang

MICCAI 2025

- Proposes the first segmentation-free Vision Transformer framework for high-risk plaque classification in coronary CTA, integrating multi-scale cross-sectional, axial, and geometric context via a hybrid ViT-ConvNeXt-U-Net architecture.

- Validated on 3,068 coronary arteries from the SCOT-HEART study, achieving state-of-the-art performance (AUC 0.818, F1 0.749) and outperforming both ViT and CNN baselines.

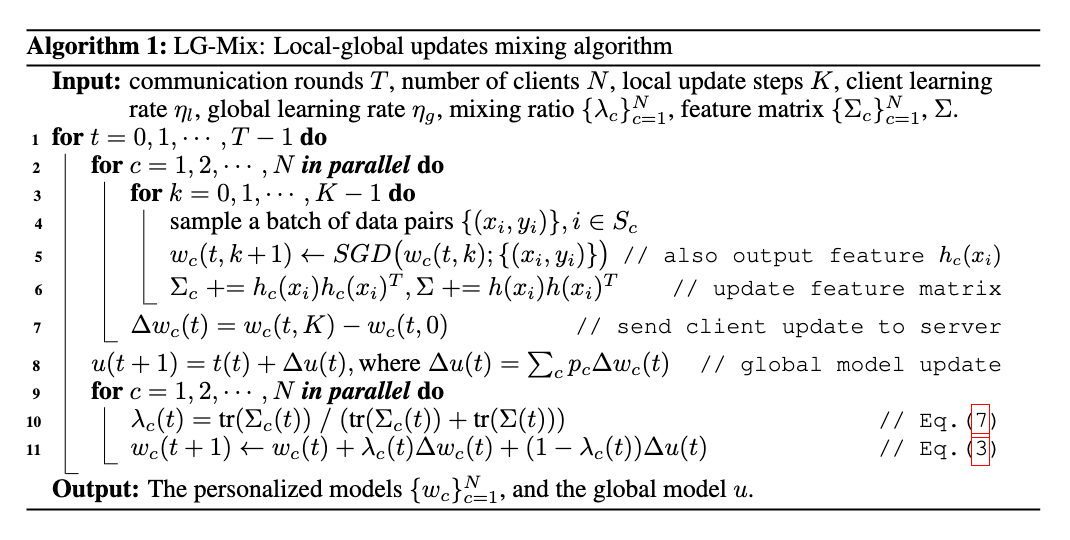

Heterogeneous Personalized Federated Learning by Local-Global Updates Mixing via Convergence Rate

Meirui Jiang, Anjie Le, Xiaoxiao Li, Qi Dou

ICLR 2024

- Introduces a novel method for federated learning that dynamically blends local and global model updates, grounded in convergence rate theory, enabling personalized model adaptation across heterogeneous data sources.

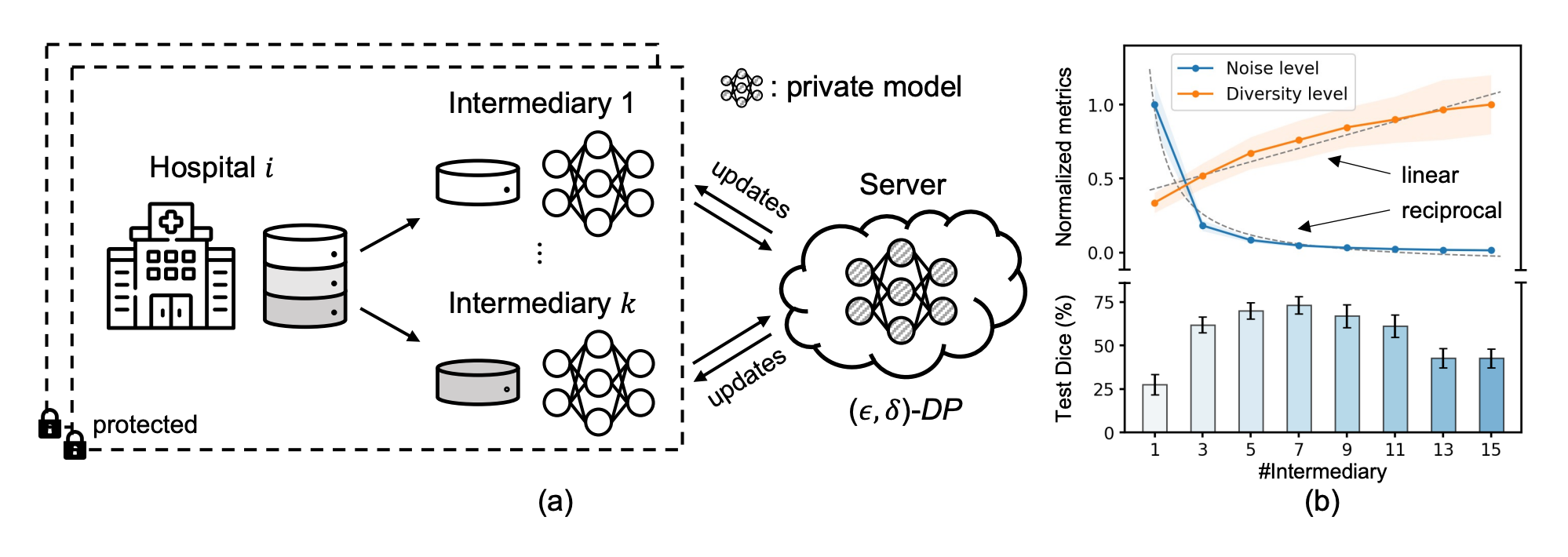

Client-Level Differential Privacy via Adaptive Intermediary in Federated Medical Imaging

Meirui Jiang, Yuan Zhong, Anjie Le, Xiaoxiao Li, Qi Dou

MICCAI 2023

- Proposes a framework that balances client-level differential privacy with federated training via an adaptive intermediary mechanism tailored to medical imaging applications.

For a full overview of my scholarly work, visit my Google Scholar profile.